All You Need to Know About Removing Duplicate Rows / De-duplication from Your Dataset

Introduction

De-duplication is the process of identifying and removing duplicate records from a dataset. You may need it in Excel files, while analyzing data in Python, or when writing SQL queries. Here are the steps you can follow to de-duplicate data during analysis:

- Define what constitutes a duplicate: Before you start de-duplication, it’s important to define what constitutes a duplicate. For example, if you’re analyzing customer data, you might define a duplicate as a record with the same name, email address, and phone number.

- Sort the data: Once you’ve defined what constitutes a duplicate, sort the data by the fields you’re using to identify duplicates. This will make it easier to identify and remove duplicates.

- Identify duplicates: Use software tools such as Excel, R, or Python, to identify duplicate records in your data set. You can use a variety of techniques for identifying duplicates, such as removing all exact duplicates, comparing a combination of fields, or using fuzzy matching.

- Decide which records to keep: Once you’ve identified duplicates, decide which records to keep. For example, you might decide to keep the record with the most complete information or the record with the most recent date.

- Remove duplicates: After deciding which records to keep, remove the duplicate records from your data set. This could involve deleting records or merging duplicate records into a single record.

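- Validate results: Finally, validate your de-duplication results to ensure that you have removed all duplicates from your dataset and have not removed records that only looked alike, for example by comparing row counts before and after.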

By following these steps, you can effectively de-duplicate your dataset and ensure that your analysis is based on accurate and reliable data.
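The steps above can be sketched in plain Python. This is a minimal illustration, not a definitive implementation: the sample records, the field names, and the choice to treat "same name and email, ignoring case and surrounding spaces" as a duplicate are all assumptions made for the example. Grouping records in a dictionary plays the role of the sorting step here.

```python
from collections import defaultdict

# Hypothetical customer records; the field names are assumptions for illustration.
records = [
    {"name": "John Smith", "email": "john@example.com", "phone": "555-0100"},
    {"name": "john smith ", "email": "JOHN@example.com", "phone": ""},
    {"name": "Sarah Lee", "email": "sarah@example.com", "phone": "555-0101"},
]


def duplicate_key(record):
    """Step 1: a duplicate shares name and email, ignoring case and spaces."""
    return (record["name"].strip().lower(), record["email"].strip().lower())


def completeness(record):
    """Count the non-empty fields, used to decide which record to keep."""
    return sum(1 for value in record.values() if value.strip())


# Steps 2-3: group records that share the same key.
groups = defaultdict(list)
for record in records:
    groups[duplicate_key(record)].append(record)

# Steps 4-5: keep the most complete record from each group.
deduplicated = [max(group, key=completeness) for group in groups.values()]

# Step 6: validate that every key now appears exactly once.
keys = [duplicate_key(record) for record in deduplicated]
assert len(keys) == len(set(keys))

print(deduplicated)
```

Swapping `completeness` for a function that returns a record's date would keep the most recent record instead of the most complete one.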

Process

Remove Duplicate Rows in Excel:

In Excel, you can remove duplicate rows with the “Remove Duplicates” feature, or highlight them with the “Conditional Formatting” feature and delete them yourself.

Using the “Remove Duplicates” feature

- Open Excel and open the worksheet that contains the data from which you want to remove duplicates.

- Select the range of cells that contain the data from which you want to remove duplicates.

- Go to the “Data” tab in the ribbon menu and click on “Remove Duplicates”.

- In the “Remove Duplicates” dialog box, check the boxes next to the columns that contain the data that you want to check for duplicates. In this example, we’ll check the box next to the “Name” column.

- Click “OK” to remove the duplicate values.

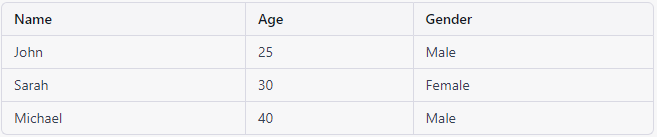

Before removing duplicates:

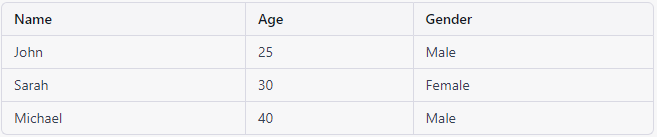

After removing duplicates:

Using the “Conditional Formatting” feature

- Open Excel and open the worksheet that contains the data from which you want to remove duplicates.

- Select the range of cells that contain the data from which you want to remove duplicates.

- Go to the “Home” tab in the ribbon menu and click on “Conditional Formatting”.

- Select “Highlight Cells Rules” and then “Duplicate Values”.

- In the “Duplicate Values” dialog box, select the formatting that you want to apply to the duplicate values. In this example, we’ll select “Light Red Fill with Dark Red Text”.

- Click “OK” to apply the formatting to the duplicate values.

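- Manually delete the highlighted duplicate values. Note that Excel highlights every occurrence of a duplicated value, including the first one, so keep one copy of each.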

Before removing duplicates:

After removing duplicates:

Remove Duplicate Rows Using Python:

We are going to use the same data to remove duplicate values in Python using the Pandas library:

import pandas as pd

# create a dataframe
df = pd.DataFrame({
    'Name': ['John', 'Sarah', 'John', 'Michael', 'Sarah'],
    'Age': [25, 30, 25, 40, 30],
    'Gender': ['Male', 'Female', 'Male', 'Male', 'Female']
})

# remove duplicates
df.drop_duplicates(inplace=True)

# print the dataframe
print(df)

Output:

      Name  Age  Gender
0     John   25    Male
1    Sarah   30  Female
3  Michael   40    Male

Remove Duplicate Rows Using SQL:

In SQL there are several ways to de-duplicate, depending on the data and circumstances. Here are a few examples:

Using DISTINCT:

Assuming you have a table named customers with the following data:

You can use the DISTINCT keyword in a SELECT statement to remove duplicate rows from a table. Here's the SQL query to do this:

SELECT DISTINCT name, email
FROM customers;

Output:

As you can see, the query returns only unique combinations of name and email, removing the duplicate rows.

You can also use the GROUP BY clause to remove duplicate rows based on specific columns. Here's an example query:

SELECT name, email
FROM customers
GROUP BY name, email;

Output:

In this example, we grouped the rows by the name and email columns, which resulted in removing the duplicate rows.
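To try both queries without a database server, here is a small sketch using Python's built-in `sqlite3` module. The rows are hypothetical stand-ins for the customers table, and the `id` column is an assumption added so the duplicate rows can be told apart.

```python
import sqlite3

# Hypothetical rows standing in for the customers table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT, email TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [
        (1, "John", "john@example.com"),
        (2, "Sarah", "sarah@example.com"),
        (3, "John", "john@example.com"),
    ],
)

# DISTINCT and GROUP BY on the same columns return the same unique pairs.
distinct_rows = conn.execute(
    "SELECT DISTINCT name, email FROM customers ORDER BY name"
).fetchall()
grouped_rows = conn.execute(
    "SELECT name, email FROM customers GROUP BY name, email ORDER BY name"
).fetchall()

# Both queries only shape the result set; the table still holds every row.
remaining = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]

print(distinct_rows)
print(grouped_rows)
print(remaining)
conn.close()
```

Both result lists contain one row for John and one for Sarah, while the table itself still holds all three rows. Neither query deletes anything.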

Using GROUP BY with an aggregate function:

Assume you have a table named orders with the following data:

If you run the following query to get distinct product and price:

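SELECT DISTINCT product, price
FROM orders;

The output you will get is:

As you can see, the output still contains more than one row for the Pen and Notebook products, even though the DISTINCT keyword was used. DISTINCT compares the whole (product, price) combination, so a product that appears with more than one price is returned once per price.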

To get a single row per product, group by the product column alone and aggregate the price, for example with the SUM function to get the total sales of each product. (Grouping by both product and price would return the same rows as the DISTINCT query above.) Here's an example query:

SELECT product, SUM(price) AS total_sales
FROM orders
GROUP BY product;

The output you will get is:

As you can see, the output now shows the total sales for each product, with one row per product.
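The difference between DISTINCT on two columns and grouping by the product alone is easy to check with Python's built-in `sqlite3` module. The rows below are hypothetical: they only assume, as the text describes, that Pen and Notebook each appear at more than one price.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders "
    "(order_id INTEGER, customer_id INTEGER, product TEXT, price INTEGER)"
)
# Hypothetical orders: Pen and Notebook are each sold at two prices.
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?, ?)",
    [
        (1, 101, "Pen", 10),
        (2, 102, "Pen", 12),
        (3, 103, "Notebook", 50),
        (4, 101, "Notebook", 55),
        (5, 104, "Eraser", 5),
    ],
)

# DISTINCT on (product, price): one row per product-price combination.
distinct_pairs = conn.execute(
    "SELECT DISTINCT product, price FROM orders ORDER BY product, price"
).fetchall()

# GROUP BY product with SUM: exactly one row per product.
totals = conn.execute(
    "SELECT product, SUM(price) AS total_sales "
    "FROM orders GROUP BY product ORDER BY product"
).fetchall()

print(distinct_pairs)
print(totals)
conn.close()
```

With this data, the DISTINCT query returns five rows (two each for Pen and Notebook), while the grouped query returns three, one per product.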

Using ROW_NUMBER() function:

Let’s use a more flexible and popular technique for de-duplication, the ROW_NUMBER() function:

Assume we have the same table named orders with the following data:

If you want to remove duplicates based on the product column, you can use the following query:

SELECT order_id, customer_id, product, price
FROM (
    SELECT order_id, customer_id, product, price,
           ROW_NUMBER() OVER(PARTITION BY product ORDER BY order_id) AS row_num
    FROM orders
) AS tmp
WHERE row_num = 1;

This query uses the ROW_NUMBER() function with the PARTITION BY clause to assign a unique number to each row within a group of rows that have the same value in the product column. The ORDER BY clause specifies the order in which the numbers are assigned. The outer query then selects only the rows with a row_num value of 1, which correspond to the first occurrence of each unique product value.

The output you will get from this query is:

As you can see, the output correctly removes the duplicate rows for the Pen and Notebook products.
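The ROW_NUMBER() query can be run as-is through Python's built-in `sqlite3` module, provided the bundled SQLite is version 3.25 or later (when window functions were added). The sketch below uses hypothetical order rows, and the helper name `one_row_per_product` is invented for this example. It also shows how the ORDER BY inside the window decides which duplicate survives.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders "
    "(order_id INTEGER, customer_id INTEGER, product TEXT, price INTEGER)"
)
# Hypothetical orders with repeated products.
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?, ?)",
    [
        (1, 101, "Pen", 10),
        (2, 102, "Pen", 12),
        (3, 103, "Notebook", 50),
        (4, 101, "Notebook", 55),
        (5, 104, "Eraser", 5),
    ],
)


def one_row_per_product(direction):
    """Keep one order per product: 'ASC' keeps the earliest, 'DESC' the latest."""
    if direction not in ("ASC", "DESC"):
        raise ValueError("direction must be 'ASC' or 'DESC'")
    query = f"""
        SELECT order_id, customer_id, product, price
        FROM (
            SELECT order_id, customer_id, product, price,
                   ROW_NUMBER() OVER (
                       PARTITION BY product ORDER BY order_id {direction}
                   ) AS row_num
            FROM orders
        ) AS tmp
        WHERE row_num = 1
        ORDER BY order_id
    """
    return conn.execute(query).fetchall()


earliest = one_row_per_product("ASC")
latest = one_row_per_product("DESC")
print(earliest)
print(latest)
```

Ordering by `order_id` ascending keeps orders 1, 3 and 5. Ordering descending keeps orders 2, 4 and 5 instead, which is one way to implement a "keep the most recent record" rule.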

Using ROW_NUMBER() function with QUALIFY clause:

We can also use the QUALIFY clause to remove duplicates based on a specific column. QUALIFY filters the results of a query on a condition that includes a window function, much as HAVING filters on an aggregate. It is not part of standard SQL: engines such as Teradata, Snowflake, BigQuery and DuckDB support it, while MySQL, PostgreSQL and SQLite do not, so on those engines use the subquery form shown above.

Here’s an example of how to use the QUALIFY clause to remove duplicates from the same orders table based on the product column:

SELECT order_id, customer_id, product, price
FROM orders
QUALIFY ROW_NUMBER() OVER(PARTITION BY product ORDER BY order_id) = 1;

This query uses the same ROW_NUMBER() function with the PARTITION BY and ORDER BY clauses to assign a unique number to each row within a group of rows that have the same value in the product column. The QUALIFY clause then keeps only the rows whose row number is 1 in each group, without needing a subquery.

The output you will get from this query is the same as the previous example:

Conclusion:

Each of these methods has its own advantages and disadvantages, and the best method to use will depend on the specific requirements of your analysis and the characteristics of your data. Some methods may be faster or more efficient for large datasets, while others may be easier to use for those who are less familiar with programming or SQL. It’s important to consider the pros and cons of each method and choose the one that best meets your needs.